Direct Mapping

The need for new visual Paradigms for the Operation of the Internet of Things.

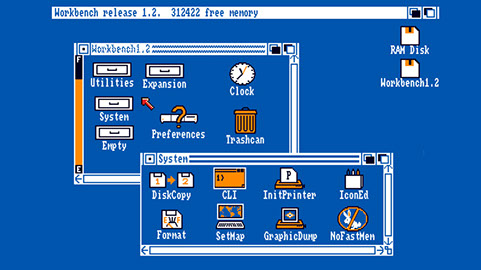

The Xerox Star (1981) was the first commercially available computer with a Graphical User Interface. The paradigms introduced with this computer are still present in our day-to-day interaction with data: a pointing device, a keyboard and a Graphical User Interface.

These paradigms are also used when we interact with our connected devices. When we want to show the latest smart lighting system to our friends, we take our phone out of our pocket, search for the App, search a drop-down menu for the name that we assigned to the light, and only then are we able to operate some controls.

This proves useful when we just have a few devices.

But how does this interface paradigm work when these connected things are truly ubiquitous? What if all our lights and light switches, doors, entertainment systems, kitchen appliances, garden tools and cars are smart and connected?

The number of Apps and drop-down menus on your phone will become so large that it will be impossible for you to memorize which App and which menu name is connected with each device. In this case, you might find yourself standing in the kitchen when all you want to do is switch on the light in front of you. Was it light number 10?

We operate the physical world with our hands. Over thousands of years we have formed the shapes and affordances of objects so that we can operate them simply with our muscle memory, without even having to think about it.

If the present scenario, operating the world with a touch screen, is really to offer an advantage over the paradigms we used to operate physical things in the past, it has to become as simple as operating a light switch.

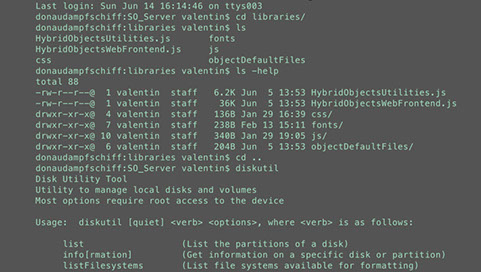

This problem is not a new one. When we only had a text terminal to operate a computer, one needed to memorize all the commands. This is very effective for someone who has had proper training, but it proves to be too complicated for the average person.

This is why Graphical User Interfaces were invented. Where commands once had to be memorized in order to operate a computer, the memory of the commands now made its way onto the screen. A user is able to just click on a command represented as an icon on the screen instead of learning all the possibilities upfront. With the desktop metaphor, the Graphical User Interface became self-explanatory.

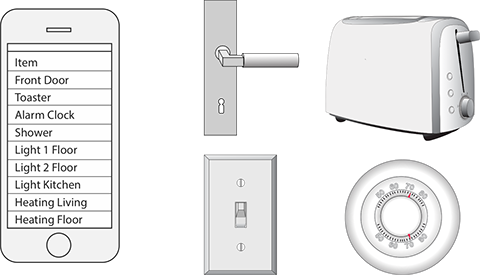

Something similar has to happen with all the things we want to connect. At this point a user needs to memorize all the relations between Apps, drop-down menus and physical things. If the number of things becomes too large, a user quickly reaches the limit of what can be memorized.

A true solution would be for the digital and the physical to become truly merged, so that one would no longer be able to separate what is physical from what is digital.

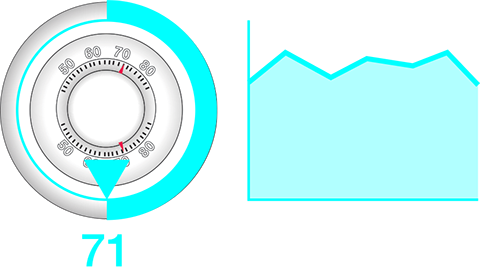

We are not quite there yet, but we are able to make a first step. With Open Hybrid, you are able to directly map a digital interface onto a physical object. By doing so, you never need to memorize any drop-down menu or App. With the Reality Editor you can just approach an object and interact with its directly mapped user interface. You can build hundreds of objects or even interact with spaces that you have never been to before. At no point do you need to retain a memory of how things are connected.